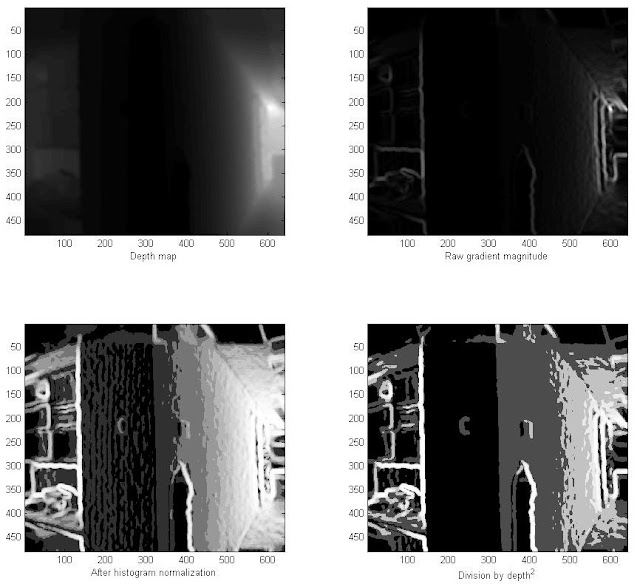

We fixed the average 3D surface normals assigned to superpixels. The following pictures show surface normal classification for images in our training set. The normals are divided into classes based on their angles in the xy and xz planes.
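A minimal sketch of this kind of classification, in Python with NumPy. The post does not say how the 128 classes split between the two angles. Several things here are assumptions for illustration: the 16 xy bins × 8 xz bins layout (4 × 4 for the 16-class case), the use of `arctan2` to get each plane angle, and the function names. Superpixel labels are assumed to be an integer label per pixel from any segmentation method.

```python
import numpy as np

# ASSUMPTION: 128 classes = 16 xy bins * 8 xz bins (use n_xy=4, n_xz=4 for 16 classes).

def normal_angles(normals):
    """Angles in degrees, in [0, 360), of each normal projected onto the xy and xz planes.

    Note: arctan2(0, 0) returns 0, so a normal with no xy (or xz) component
    falls into bin 0 for that angle.
    """
    nx, ny, nz = normals[..., 0], normals[..., 1], normals[..., 2]
    xy = np.degrees(np.arctan2(ny, nx)) % 360.0
    xz = np.degrees(np.arctan2(nz, nx)) % 360.0
    return xy, xz

def normal_classes(normals, n_xy=16, n_xz=8):
    """Class index = xy_bin * n_xz + xz_bin for each normal."""
    xy, xz = normal_angles(normals)
    # The trailing modulo guards against a value that rounds up to exactly 360.
    xy_bin = np.floor(xy / (360.0 / n_xy)).astype(int) % n_xy
    xz_bin = np.floor(xz / (360.0 / n_xz)).astype(int) % n_xz
    return xy_bin * n_xz + xz_bin

def superpixel_classes(normals, labels, n_xy=16, n_xz=8):
    """Average the normals inside each superpixel, renormalize, then classify.

    normals: (H, W, 3) array; labels: (H, W) integer superpixel ids starting at 0.
    Returns the unit mean normal per superpixel and an (H, W) map of superpixel classes.
    """
    flat_n = normals.reshape(-1, 3)
    flat_l = labels.ravel()
    n_sp = flat_l.max() + 1
    sums = np.zeros((n_sp, 3))
    np.add.at(sums, flat_l, flat_n)  # unbuffered per-label sum of normal vectors
    norms = np.linalg.norm(sums, axis=1, keepdims=True)
    mean = sums / np.maximum(norms, 1e-12)
    sp_class = normal_classes(mean, n_xy, n_xz)
    return mean, sp_class[labels]
```

Averaging the vectors and renormalizing, rather than averaging the angles, avoids wrap-around errors where an angle crosses 0°/360°.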

1) Depth map with 3D surface normals overlaid

2) Per-pixel surface normal classes (128 total classes)

3) Fine-scale superpixel segmentation

4) Fine-scale superpixel classes (128 total classes) with 3D surface normals overlaid

2) Per-pixel surface normal classes (16 total classes)

3) Larger superpixel segmentation

4) Larger superpixel classes (16 total classes) with 3D surface normals overlaid

1) Depth map with 3D surface normals overlaid

2) Per-pixel surface normal classes (128 total classes)

3) Fine-scale superpixel segmentation

4) Fine-scale superpixel classes (128 total classes) with 3D surface normals overlaid

1) Depth map with 3D surface normals overlaid

2) Per-pixel surface normal classes (16 total classes)

3) Larger superpixel segmentation

4) Larger superpixel classes (16 total classes) with 3D surface normals overlaid

One observation across all of these images is that superpixels on a single surface are often assigned alternating classes, forming a checkerboard-like pattern (this is most visible on the left wall of the second image).

This happens when a surface's normal lies close to the border between two classes.

For example, suppose the xy angle for class 1 is between 0° and 45°, and the xy angle for class 2 is between 45° and 90°. If the estimated surface normals on a wall have xy angles that vary between 40° and 50°, the normals still point in approximately one direction, but the class assignment bounces back and forth between these two classes.
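The example can be reproduced in a few lines of Python. The angle values below are made up for illustration; the point is that a spread of only a few degrees straddling the 45° boundary is enough to make the class alternate between neighboring superpixels.

```python
# Hypothetical xy-angle classes from the example: class 1 covers [0, 45) degrees,
# class 2 covers [45, 90) degrees.
BIN_WIDTH = 45.0

def xy_class(angle_deg: float) -> int:
    """Return the 1-based class for an xy angle in [0, 90) degrees."""
    return int(angle_deg // BIN_WIDTH) + 1

# Made-up mean xy angles for neighboring superpixels on one flat wall (40 to 50 degrees).
wall_angles = [41.5, 46.2, 44.0, 48.7, 43.3, 45.9]
classes = [xy_class(a) for a in wall_angles]
spread = max(wall_angles) - min(wall_angles)

print(classes)          # [1, 2, 1, 2, 1, 2]
print(f"{spread:.1f}")  # 7.2 degrees of spread, yet the class flips at every step
```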

We should still be able to exploit the fact that certain classes are closely related and can be clumped together in the final segmentation process.
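One hypothetical way to do this clumping, sketched in Python: treat two classes as related when both of their angle bins are equal or adjacent (with wrap-around, since angles are circular), then merge adjacent superpixels with related classes using union-find. The 16 × 8 bin layout, the numbering `class = xy_bin * 8 + xz_bin`, the superpixel adjacency list, and all function names are assumptions, not the method used in this project.

```python
from typing import List, Tuple

# ASSUMPTION: 128 classes laid out as 16 xy bins * 8 xz bins.
N_XY, N_XZ = 16, 8

def class_bins(c: int) -> Tuple[int, int]:
    """Split a class index into (xy_bin, xz_bin), assuming c = xy_bin * N_XZ + xz_bin."""
    return divmod(c, N_XZ)

def circular_gap(a: int, b: int, n: int) -> int:
    """Distance between two bins on a circle of n bins."""
    d = abs(a - b) % n
    return min(d, n - d)

def related(c1: int, c2: int) -> bool:
    """Classes are 'related' if both angle bins are equal or neighboring."""
    xy1, xz1 = class_bins(c1)
    xy2, xz2 = class_bins(c2)
    return circular_gap(xy1, xy2, N_XY) <= 1 and circular_gap(xz1, xz2, N_XZ) <= 1

def find(parent: List[int], i: int) -> int:
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def clump(sp_class: List[int], adjacency: List[Tuple[int, int]]) -> List[int]:
    """Merge adjacent superpixels whose classes are related; return a group id per superpixel."""
    parent = list(range(len(sp_class)))
    for a, b in adjacency:
        if related(sp_class[a], sp_class[b]):
            ra, rb = find(parent, a), find(parent, b)
            if ra != rb:
                parent[rb] = ra
    return [find(parent, i) for i in range(len(sp_class))]

# A "checkerboard" wall alternating between classes 26 and 34 (xy bins 3 and 4),
# next to a superpixel of class 98 (xy bin 12) on a different surface.
print(clump([26, 34, 26, 34, 98], [(0, 1), (1, 2), (2, 3), (3, 4)]))  # [0, 0, 0, 0, 4]
```

Because union-find merges transitively, a slowly curving surface can chain into one group even when the classes at its two ends are far apart; comparing each candidate merge against the group's mean normal instead would limit that drift.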